AI's effects on knowledge workers and creatives.

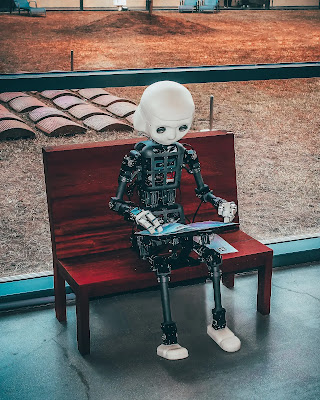

Technology has permanently changed labor markets, eliminating some jobs while creating others, from steam power and electricity to computers and the internet. Even though the term "artificial intelligence" is somewhat misleading (the most capable computer systems still don't actually know anything), the technology has reached a tipping point where it is poised to affect new kinds of jobs, including those of artists and knowledge workers.

Computers can now generate human-sounding written language and turn descriptive sentences into realistic imagery thanks to the development of large language models, AI systems trained on massive amounts of text. The Conversation asked five AI researchers to discuss how large language models are expected to affect creatives and knowledge workers.

And, as our experts pointed out, the technology is far from perfect, which raises a number of issues that affect human workers, such as misinformation and plagiarism.


Everyone is creative, but will skills be lost?

Large language models are making creative and knowledge work accessible to everyone. Anyone with an internet connection can now use tools like ChatGPT or DALL-E 2 to express themselves and make sense of huge amounts of information, for example by generating text summaries.

Especially notable is the depth of humanlike expertise that large language models display. In just minutes, novices can create illustrations for business presentations, generate marketing pitches, get ideas to overcome writer's block, or write new computer code to perform specific functions, all at a level of quality typically attributed to human experts.

Of course, these new AI tools can't read minds. To get the results the human user is looking for, a new, yet simpler, kind of human creativity is needed in the form of text prompts. Through iterative prompting, an example of human-AI collaboration, the AI system generates successive rounds of output until the person writing the prompts is satisfied with the results.

Opening up the realm of creative and information work to everyone has many advantages, but these new AI tools also have drawbacks. First, they might hasten the decline of critical human abilities that will still be crucial in the future, particularly writing abilities.

Possible Biases, Errors, and Plagiarism

I regularly use GitHub Copilot, a tool that helps people write computer code, and I've spent countless hours experimenting with ChatGPT and other AI text-generation tools. In my experience, these tools work well for exploring ideas I haven't thought of before.

They can suggest new ways to improve the flow of my ideas, or solutions that use software packages I didn't know existed. Once I see the output these tools produce, I can evaluate its quality and edit it heavily.

Still, I have a few concerns.

One set of problems is their errors, both small and large. With Copilot and ChatGPT, I'm constantly checking whether suggestions are too shallow, such as text without much substance or inefficient code, or whether the output is just plain wrong, such as incorrect analogies or conclusions, or code that doesn't run. If users don't think critically about what these tools produce, the tools can do real harm.

Bias is another issue. Language models can learn and reproduce the biases in their training data. These biases are hard to spot in generated text, but they are quite obvious in image-generation models. Although OpenAI, the developer of ChatGPT, has been fairly careful about what the model will respond to, users routinely find ways around these guardrails.

Plagiarism is yet another problem. Recent research has shown that image-generation tools often plagiarize other people's work. Does the same happen with ChatGPT?

These tools are still in their infancy relative to their potential. For now, I believe there are ways around their current limitations. For example, tools could fact-check generated text against knowledge bases, use existing methods to detect and remove biases from large language models, and run their output through more sophisticated plagiarism-detection software.
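One simple idea behind automated plagiarism checks is to measure how many word sequences a generated text shares with a known source. The sketch below is only an illustration under that assumption: the function names are hypothetical, and real plagiarism-detection software uses far more robust techniques. It compares the sets of three-word sequences (trigrams) in two texts using Jaccard similarity, where 0.0 means no shared trigrams and 1.0 means identical sets.

```python
import re


def word_ngrams(text: str, n: int = 3) -> set:
    """Return the set of lowercase word n-grams (as tuples) in text."""
    words = re.findall(r"[a-z']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_score(generated: str, source: str, n: int = 3) -> float:
    """Jaccard similarity between the n-gram sets of two texts (0.0 to 1.0)."""
    a, b = word_ngrams(generated, n), word_ngrams(source, n)
    if not a or not b:
        return 0.0  # too short to compare
    return len(a & b) / len(a | b)


if __name__ == "__main__":
    source = "the quick brown fox jumps over the lazy dog"
    copied = "the quick brown fox jumps over a sleeping cat"
    reworded = "a fast red fox leaped across the tired hound"
    print(f"copied:   {overlap_score(copied, source):.2f}")    # 4 shared of 10 total trigrams
    print(f"reworded: {overlap_score(reworded, source):.2f}")  # no shared trigrams
```

A high score flags text for a human to review; a low score does not prove originality, since paraphrased copying shares few exact word sequences.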

Humans will be overtaken, leaving only specialized and "handmade" jobs.

We humans love to think of ourselves as unique, but science and technology have repeatedly disproved this belief. Science has shown that other animals engage in behaviors long thought to be exclusive to humans, including using tools, forming teams, and spreading culture.

Meanwhile, technology has refuted, one by one, claims that human minds are necessary for cognitive tasks. The first mechanical calculator was built in 1623. Last year, a computer-generated artwork won an art competition. I believe we are approaching the singularity, the point at which computers surpass human intelligence.

Of course, the picture is not black and white. In many fields, humans will make up only a small share of the workforce while computers do most of the work. Consider manufacturing: although robots now handle many tasks, there is still a market for handcrafted goods.

If history is any guide, it's almost certain that advances in AI will eliminate more jobs, that members of the creative class with human-only skills will become richer but fewer in number, and that those who own creative technologies will become the new mega-rich.

New jobs will emerge to replace the old ones.

Large language models are, at heart, highly sophisticated sequence-completion machines: give one a sequence of words ("I would like to eat an ...") and it will return a likely completion ("apple"). Large language models like ChatGPT, trained on record-breaking amounts of text (trillions of words), have surprised many people, including many AI researchers, with how realistic, extensive, flexible, and context-sensitive their completions are.
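To make "sequence completion" concrete, here is a toy bigram model: it counts which word follows which in a tiny made-up corpus and then proposes the most frequent next word. This is a deliberately simplified stand-in, not how ChatGPT works; real large language models use neural networks over subword tokens trained on vastly more text. The corpus and function names below are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list) -> dict:
    """Count, for each word, how often each other word follows it."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def complete(prompt: str, follows: dict, max_words: int = 3) -> list:
    """Greedily append the most frequent next word, up to max_words times."""
    words = prompt.lower().split()
    if not words:
        return []
    added = []
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # no known continuation for the last word
        nxt = candidates.most_common(1)[0][0]
        words.append(nxt)
        added.append(nxt)
    return added


if __name__ == "__main__":
    corpus = [
        "I would like to eat an apple",
        "she wants to eat an apple today",
        "he likes to eat an orange",
    ]
    model = train_bigrams(corpus)
    print(complete("I would like to eat an", model, max_words=1))
```

Because "an" is followed by "apple" twice and "orange" once in this corpus, the model completes the prompt with "apple". Scaling the same "predict a likely continuation" idea up to enormous models and datasets is what makes modern completions so fluent.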

Like any powerful new technology, this one will affect the people who offer the skill it automates. In this case, it automates the production of coherent, if somewhat generic, prose. To imagine what might happen, it helps to consider the effects of the introduction of word-processing software in the early 1980s.

At the same time, previously unimaginable occupations and skills emerged, such as the frequently listed résumé skill "MS Office." And the demand for high-end document production continued, growing in capability, sophistication, and specialization.

Large language models will most likely follow a similar pattern: you will no longer need to ask other people to draft coherent, generic text. At the same time, large language models will open up new ways of working and create jobs that haven't even been imagined yet.

To see why, consider just three areas where large language models fall short.

- First, crafting a prompt that produces the intended results can require quite a bit of (human) ingenuity. A small change in the prompt can have a big impact on the output.

- Second, large language models can produce inappropriate or nonsensical output without warning.

- Third, as far as AI researchers can tell, large language models have no abstract, general understanding of what is true or false, what is right or wrong, or what is simply common sense. Notably, they cannot reliably do even very basic math. This means their output can turn out to be plainly false, illogical, biased, or misleading.

In conclusion, although large language models will undoubtedly cause upheaval for creative and knowledge workers, there are still plenty of exciting new opportunities in store for those who are willing to embrace these powerful new tools and incorporate them into their work.

Technological Advances Produce New Skills.

Knowledge work is no different from other kinds of work in how technology transforms it. Science and medicine have changed enormously over recent decades thanks to rapidly advancing molecular characterization, such as fast, inexpensive DNA sequencing, and the digitization of medicine through apps, telemedicine, and data analysis.

Some technological steps are bigger than others. In the early days of the internet, Yahoo used human curators to index new content. The arrival of algorithms that ranked results based on the patterns of links between web pages fundamentally changed the search landscape and transformed how people find information today.

Another leap came with the release of OpenAI's ChatGPT. ChatGPT wraps a state-of-the-art large language model, tuned for conversation, in a highly intuitive interface. It puts a decade of rapid progress in AI at people's fingertips. The program can write passable cover letters and teach users how to solve common problems in language styles of their choosing.

Just as the skills needed to find information online changed with the arrival of Google, the ability to write prompts and prompt templates that produce the desired results will be key to getting the best output from language models.

There are several possible prompts for the cover letter example. "Write a cover letter for a job" would produce a more generic result than "Write a cover letter for a position as a data entry specialist." The user could write even more specific prompts by pasting in portions of the job description, their résumé, and detailed instructions.
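The progression from a generic prompt to a more specific one can be captured in a reusable prompt template. The sketch below assumes nothing about any particular model or API: the function name, field names, and wording are hypothetical, and the resulting string would simply be pasted into whatever chat tool the user prefers. Each optional field the user fills in makes the prompt more specific.

```python
from typing import Optional


def build_cover_letter_prompt(
    role: Optional[str] = None,
    job_description: Optional[str] = None,
    resume: Optional[str] = None,
    instructions: Optional[str] = None,
) -> str:
    """Assemble a cover-letter prompt; every field supplied narrows the request."""
    if role:
        parts = [f"Write a cover letter for a position as a {role}."]
    else:
        parts = ["Write a cover letter for a job."]  # most generic form
    if job_description:
        parts.append(f"Job description:\n{job_description}")
    if resume:
        parts.append(f"My résumé:\n{resume}")
    if instructions:
        parts.append(f"Instructions: {instructions}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_cover_letter_prompt())
    print("---")
    print(build_cover_letter_prompt(
        role="data entry specialist",
        job_description="Enter and verify customer records daily.",
        resume="Three years of office administration experience.",
        instructions="Keep it under 250 words and use a friendly tone.",
    ))
```

Keeping the template fixed and varying only the fields makes it easier to see which added detail actually changes the model's output, which matters given how sensitive these models are to small changes in wording.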

As with many other technological developments, the way people interact with the world will change in the era of widely available AI models. The question is whether society will use this moment to advance equity or to widen existing gaps.

